Moving the data center to DDR5 may matter more than other upgrades. Many people, however, think of DDR5 only vaguely, as a simple replacement for DDR4. With the arrival of DDR5, processors inevitably change and gain new memory interfaces, as they have at each previous generation from SDRAM through DDR4.

However, DDR5 is more than an interface change; it is reshaping the processor memory system. In fact, the changes DDR5 brings may be substantial enough on their own to justify upgrading to compatible server platforms.

Why choose a new memory interface?

Since the advent of computers, computational problems have grown steadily more complex. This growth has driven not only more servers, larger memory and storage capacities, and higher processor clock speeds and core counts, but also architectural shifts, including the recent move toward disaggregation and the adoption of AI.

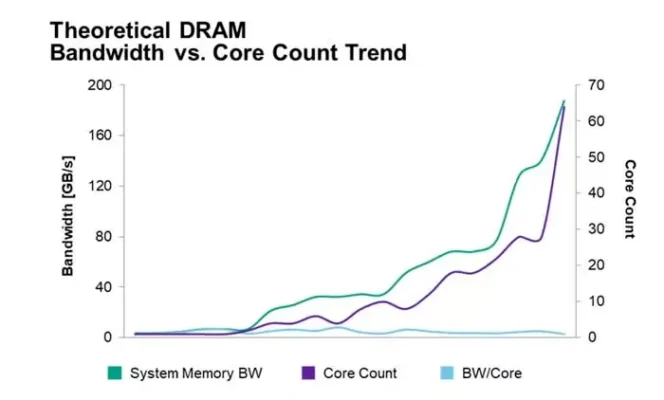

One might assume these metrics grow in step, since all of them are rising. However, while processor core counts keep climbing, DDR bandwidth has not kept pace, so bandwidth per core has steadily declined.

Datasets keep growing, especially in HPC, gaming, video encoding, machine learning inference, big data analytics, and databases. Memory bandwidth can be increased by adding memory channels to the CPU, but this comes at the cost of higher power consumption. The approach is also not sustainable: every channel needs more processor pins, so the channel count cannot grow indefinitely.
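A back-of-the-envelope calculation shows why bandwidth per core falls. The sketch below assumes peak theoretical bandwidth equals channels × transfer rate × 8 bytes (a 64-bit data bus, ECC bits excluded) and divides it evenly across cores. The two configurations are hypothetical examples chosen for illustration, not measurements of specific processors.

```python
# Peak theoretical DRAM bandwidth and an even per-core share.
# Assumption: a 64-bit data bus per channel (ECC bits excluded), so each
# transfer moves 8 bytes. Real sustained bandwidth is lower than this peak.

BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Peak bandwidth in GB/s (decimal): channels x MT/s x bytes per transfer."""
    return channels * mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


def bandwidth_per_core_gbs(channels: int, mega_transfers_per_s: int, cores: int) -> float:
    """Peak bandwidth divided evenly across all cores."""
    return peak_bandwidth_gbs(channels, mega_transfers_per_s) / cores


# Hypothetical configurations: core count grows faster than channels x rate.
for channels, rate, cores in [(6, 2666, 28), (8, 3200, 64)]:
    total = peak_bandwidth_gbs(channels, rate)
    per_core = bandwidth_per_core_gbs(channels, rate, cores)
    print(f"{channels} ch @ {rate} MT/s, {cores} cores: "
          f"{total:.1f} GB/s total, {per_core:.2f} GB/s per core")
```

In this example total peak bandwidth rises by about 60% (128.0 to 204.8 GB/s), yet each core's share drops by roughly 30% (4.57 to 3.20 GB/s).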

Some applications, particularly high-core-count devices such as GPUs and specialized AI processors, use high-bandwidth memory (HBM). HBM uses a 1024-bit-wide interface per stack to move data quickly from stacked DRAM dies to the processor, making it an effective solution for memory-intensive applications such as AI. In these designs, placing the memory very close to the processor is essential for fast transfers. However, this approach is more expensive, and the memory cannot be installed on interchangeable or upgradable modules.
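The advantage of a wide interface follows from simple arithmetic: peak bandwidth is bus width times per-pin data rate. In this sketch the 1024-bit width comes from the text, while the per-pin rates (2.0 Gb/s for the HBM stack, 3.2 Gb/s for a 64-bit DDR4-3200 channel) are illustrative assumptions.

```python
# Peak bandwidth of a memory interface: width (bits) x per-pin rate (Gb/s) / 8.
# The per-pin rates used below are illustrative assumptions, not vendor specs.


def interface_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s for a bus of the given width and per-pin data rate."""
    return bus_width_bits * gbps_per_pin / 8


hbm_stack = interface_bandwidth_gbs(1024, 2.0)  # one HBM stack, 1024-bit interface
ddr_channel = interface_bandwidth_gbs(64, 3.2)  # one 64-bit DDR4-3200 channel

print(f"HBM stack:   {hbm_stack:.1f} GB/s")
print(f"DDR channel: {ddr_channel:.1f} GB/s")
print(f"Ratio:       {hbm_stack / ddr_channel:.0f}x")
```

Even at a lower per-pin rate, the 16x wider interface delivers about 10x the peak bandwidth. Routing that many signals is only practical when the memory sits right next to the processor, which is why HBM cannot live on a removable module.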

DDR5 memory, on the other hand, widely introduced this year, aims to increase channel bandwidth between processors and memory while preserving upgradability.

Bandwidth and Latency of DDR5 Memory

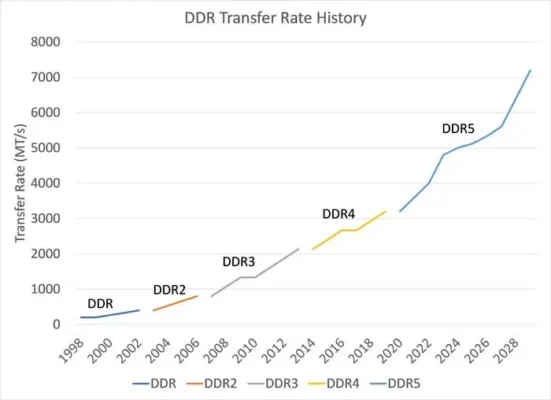

DDR5 supports higher transfer rates than any previous DDR generation. In fact, DDR5 can run at twice DDR4's transfer rate or more (the JEDEC DDR4 standard tops out at 3200 MT/s). DDR5 also introduces architectural changes so that its gains go beyond the raw transfer rate, by improving effective data bus efficiency.

Moreover, the burst length doubles from BL8 to BL16, and each DDR5 module is split into two independent 32-bit sub-channels, effectively doubling the number of channels available in the system. Because a BL16 burst on a 32-bit sub-channel still delivers a full 64-byte cache line, the two sub-channels can serve separate requests concurrently. This restructured channel layout lets DDR5 outperform DDR4 even at the same transfer rate, on top of the gains from higher transfer rates.
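The sub-channel arithmetic can be checked directly. This sketch counts data bits only (ECC bits excluded) and assumes a 64-byte cache line, which is typical for x86 and many Arm server processors.

```python
# Bytes delivered by one burst = data width (bits) x burst length / 8.
# Data bits only; ECC bits are excluded. A 64-byte cache line is assumed.

CACHE_LINE_BYTES = 64


def bytes_per_burst(width_bits: int, burst_length: int) -> int:
    """Bytes delivered by a single burst on a channel of the given width."""
    return width_bits * burst_length // 8


# DDR4: one 64-bit channel per DIMM, burst length 8.
ddr4 = {"channels_per_dimm": 1, "bytes_per_burst": bytes_per_burst(64, 8)}
# DDR5: two independent 32-bit sub-channels per DIMM, burst length 16.
ddr5 = {"channels_per_dimm": 2, "bytes_per_burst": bytes_per_burst(32, 16)}

for name, cfg in [("DDR4", ddr4), ("DDR5", ddr5)]:
    concurrent_lines = cfg["channels_per_dimm"] * cfg["bytes_per_burst"] // CACHE_LINE_BYTES
    print(f"{name}: {cfg['bytes_per_burst']} B per burst, "
          f"{concurrent_lines} concurrent cache-line burst(s) per DIMM")
```

Both generations move one 64-byte line per burst, but a DDR5 DIMM can have two such bursts in flight on independent sub-channels.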

Memory-intensive workloads will benefit most from the move to DDR5, and many of today's data-intensive workloads, especially AI, databases, and online transaction processing (OLTP), fit that description.

Transfer rate also matters. DDR5 memory currently runs at 4800 to 6400 MT/s, and rates are expected to climb further as the technology matures.
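Peak per-module bandwidth follows from the transfer rate. A DDR4 DIMM and a DDR5 DIMM both carry 64 data bits in total (one 64-bit channel versus two 32-bit sub-channels), so this sketch multiplies the rate by 8 bytes per transfer and uses DDR4-3200 as the baseline. These are theoretical peaks, not measured throughput.

```python
# Theoretical peak bandwidth per DIMM: MT/s x 8 bytes (64 data bits, ECC excluded).

DATA_BYTES_PER_TRANSFER = 8
DDR4_BASELINE_MTS = 3200  # DDR4-3200, the fastest JEDEC DDR4 speed grade


def dimm_peak_gbs(mega_transfers_per_s: int) -> float:
    """Peak bandwidth of one DIMM in GB/s (decimal)."""
    return mega_transfers_per_s * DATA_BYTES_PER_TRANSFER / 1000


baseline = dimm_peak_gbs(DDR4_BASELINE_MTS)
for label, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800), ("DDR5-6400", 6400)]:
    peak = dimm_peak_gbs(rate)
    print(f"{label}: {peak:.1f} GB/s ({peak / baseline:.1f}x DDR4-3200)")
```

This yields 25.6, 38.4, and 51.2 GB/s respectively, so DDR5-6400 doubles DDR4-3200's peak before counting the sub-channel efficiency gains.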

Power Consumption of DDR5 Memory

DDR5 operates at a lower supply voltage than DDR4: 1.1 V instead of 1.2 V. An 8% reduction may not sound like much, but dynamic (switching) power scales with the square of the voltage, so 1.1²/1.2² ≈ 0.84. All else being equal, that is roughly a 16% reduction in the memory's dynamic power, which translates into lower electricity costs.
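The voltage arithmetic can be reproduced in a few lines. The sketch assumes the textbook model in which the energy of each switching event scales with C·V², so it estimates dynamic power at the same capacitance, frequency, and activity, and ignores static power and other design differences between DDR4 and DDR5.

```python
# Relative dynamic power at a lower supply voltage, assuming P_dyn ~ C * V^2 * f
# with capacitance C, frequency f, and switching activity held constant.

V_DDR4 = 1.2  # volts
V_DDR5 = 1.1  # volts

voltage_reduction = 1 - V_DDR5 / V_DDR4
power_ratio = (V_DDR5 / V_DDR4) ** 2

print(f"Voltage reduction:     {voltage_reduction:.1%}")
print(f"Dynamic power ratio:   {power_ratio:.1%}")
print(f"Dynamic power savings: {1 - power_ratio:.1%}")
```

This prints 8.3%, 84.0%, and 16.0%. Because DDR5 also runs at higher frequencies, total memory power will not necessarily fall by the full 16%; the saving applies per switching event, that is, to energy per bit transferred.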

DDR5's architectural changes improve bandwidth efficiency and enable higher transfer rates. Quantifying the resulting energy savings, however, is difficult without measuring the specific application environment. Nevertheless, thanks to the improved architecture and higher transfer rates, end users are likely to see better energy efficiency per bit transferred.

Furthermore, DDR5 DIMMs carry their own power management IC (PMIC) that regulates voltage on the module, reducing the power regulation the motherboard must provide and potentially yielding additional energy savings.

For data centers, server power consumption and cooling costs are critical considerations. With these factored in, DDR5's better energy efficiency makes a compelling case for upgrading.

Error Correction in DDR5 Memory

DDR5 also incorporates on-die error correction (on-die ECC). As DRAM process geometries continue to shrink, many users are concerned about rising single-bit error rates and overall data integrity.

On-die ECC corrects single-bit errors inside the DRAM during read operations, before the data leaves the DDR5 device. This offloads part of the error-correction burden from the system to the DRAM, lightening the load on system-level correction. For server applications, on-die ECC complements rather than replaces the system-level ECC used on server modules.
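To illustrate single-error correction, the sketch below implements a textbook Hamming SEC code with 128 data bits and 8 check bits, the geometry commonly described for DDR5 on-die ECC. The code actually used inside DDR5 devices is not shown here and may differ; the function names are hypothetical, and the scheme corrects one flipped bit per codeword but cannot reliably handle two.

```python
import random

# Textbook Hamming SEC code. Bits are numbered from 1; check bits sit at
# power-of-two positions and data bits fill the rest. Check bits are chosen
# so that the XOR of the positions of all 1-bits (the syndrome) is zero.


def is_check_position(pos: int) -> bool:
    return pos & (pos - 1) == 0


def hamming_encode(data: list[int]) -> list[int]:
    r = 0
    while (1 << r) < len(data) + r + 1:  # smallest r with 2^r >= k + r + 1
        r += 1
    n = len(data) + r
    code = [0] * (n + 1)  # index 0 unused
    bits = iter(data)
    syndrome = 0
    for pos in range(1, n + 1):
        if not is_check_position(pos):
            code[pos] = next(bits)
            if code[pos]:
                syndrome ^= pos
    for i in range(r):
        code[1 << i] = (syndrome >> i) & 1  # cancel the data syndrome
    return code[1:]


def hamming_correct(codeword: list[int]) -> tuple[list[int], int]:
    """Return (corrected codeword, syndrome); a nonzero syndrome names the flipped bit."""
    code = [0] + list(codeword)
    syndrome = 0
    for pos in range(1, len(code)):
        if code[pos]:
            syndrome ^= pos
    if 0 < syndrome < len(code):
        code[syndrome] ^= 1
    return code[1:], syndrome


def hamming_data(codeword: list[int]) -> list[int]:
    return [b for pos, b in enumerate(codeword, start=1) if not is_check_position(pos)]


random.seed(0)
data = [random.randint(0, 1) for _ in range(128)]
stored = hamming_encode(data)          # 136 bits: 128 data + 8 check
read_back = list(stored)
read_back[41] ^= 1                     # simulate a single-bit upset at position 42
fixed, syndrome = hamming_correct(read_back)
print(len(stored), syndrome, hamming_data(fixed) == data)  # 136 42 True
```

A two-bit error produces a nonzero syndrome that points at the wrong bit (or past the end of the codeword), which is one reason servers keep system-level ECC on top of on-die ECC.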

DDR5 also introduces an error check and scrub (ECS) function. When enabled, the DRAM device reads its internal data, corrects errors, and writes the corrected data back.
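A scrub pass can be modeled as a loop over stored codewords: read, correct, write back, and count. This is a simplified software model, not the device's actual ECS implementation; to stay short it uses a Hamming(7,4) code in place of DDR5's wider on-die code, and the memory contents and error locations are made up for illustration.

```python
import random

# Hamming(7,4): data bits at positions 3, 5, 6, 7; check bits at 1, 2, 4.
# A valid codeword has a syndrome (XOR of positions of 1-bits) of zero.
DATA_POSITIONS = (3, 5, 6, 7)


def syndrome(word: list[int]) -> int:
    s = 0
    for pos, bit in enumerate(word, start=1):
        if bit:
            s ^= pos
    return s


def encode_nibble(nibble: list[int]) -> list[int]:
    code = [0] * 8  # index 0 unused
    for pos, bit in zip(DATA_POSITIONS, nibble):
        code[pos] = bit
    s = syndrome(code[1:])
    code[1], code[2], code[4] = s & 1, (s >> 1) & 1, (s >> 2) & 1
    return code[1:]


def scrub_pass(memory: list[list[int]]) -> int:
    """Read every word, correct any single-bit error, write it back; return the error count."""
    errors = 0
    for addr, word in enumerate(memory):
        s = syndrome(word)
        if s:
            corrected = list(word)
            corrected[s - 1] ^= 1       # syndrome gives the 1-based bit position
            memory[addr] = corrected    # write back corrected data
            errors += 1
    return errors


random.seed(1)
memory = [encode_nibble([random.randint(0, 1) for _ in range(4)]) for _ in range(16)]
golden = [list(word) for word in memory]
for addr in (2, 9, 13):                 # hypothetical single-bit upsets
    memory[addr][random.randrange(7)] ^= 1

print(scrub_pass(memory), memory == golden, scrub_pass(memory))  # 3 True 0
```

Scrubbing in the background repairs single-bit errors before a second error can land in the same word, which a single-error-correcting code alone could not fix.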


Conclusion

The DRAM interface is rarely the first consideration when data centers plan upgrades, but DDR5 deserves close evaluation. The technology has the potential to significantly improve performance while saving power.

DDR5 is an enabling technology that can help early adopters transition smoothly toward future composable, scalable data centers. IT and business leaders should evaluate DDR5 and decide how and when to move from DDR4 to DDR5 as part of their data center transformation initiatives.