Large AI models keep growing, and many now have hundreds of billions of parameters. Training them takes tens of thousands, or even hundreds of thousands, of GPU accelerators, and the more hardware a job spans, the more often something fails. Meta (Facebook) recently published some striking figures on just how often.

In the report, Meta revealed that training the 405-billion-parameter Llama 3 model used a cluster of 16,384 NVIDIA H100 80GB GPUs. During a 54-day snapshot of pre-training, the job hit 419 unexpected interruptions, roughly one every three hours. About half of them were traced to faulty GPUs or their HBM3 memory.

Large-model training is a massive, tightly synchronized workload: thousands of GPUs advance in lockstep, so a single failure can halt the whole job, which must then be restarted from its most recent checkpoint.

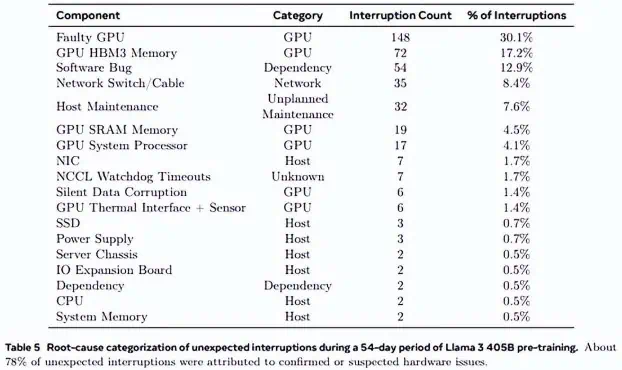

The report counted 466 job interruptions over that pre-training period: 47 were planned, caused by automated maintenance, while 419 were unexpected and mostly due to hardware problems, with GPU-related issues accounting for 58.7% of them. Specifically, 148 interruptions (30.1%) stemmed from various GPU failures (including NVLink failures), and 72 (17.2%) from HBM3 memory failures, which is not entirely surprising given that each H100 can draw up to 700 W.

Additionally, 19 interruptions were caused by GPU SRAM faults, 17 by GPU system processor faults, 6 by silent data corruption, and 6 by GPU thermal interface and sensor problems. Other interruptions came from software bugs, network switches and cables, and network interface cards (NICs).

Interestingly, CPU errors occurred only twice.
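The "one interruption every three hours" figure is simply the observation window divided by the number of unexpected interruptions, and it shrinks as clusters grow, because with independent failures the per-device failure rates add up. The minimal Python sketch below illustrates both points; the helper names are our own, the 54-day window is the snapshot length given in Meta's Llama 3 paper, and the scaling part assumes (hypothetically) independent, identical devices where any single failure stops the job.

```python
HOURS_PER_DAY = 24


def mean_hours_between(window_days: float, interruptions: int) -> float:
    """Average wall-clock hours between interruptions over an observation window."""
    return window_days * HOURS_PER_DAY / interruptions


def cluster_mtbf(per_device_mtbf_h: float, n_devices: int) -> float:
    """MTBF of a job that stops when any one of n independent, identical devices fails.

    Failure rates add (n / per_device_mtbf_h), so the cluster MTBF shrinks as 1/n.
    """
    return per_device_mtbf_h / n_devices


if __name__ == "__main__":
    # 419 unexpected interruptions during the 54-day snapshot in Meta's Llama 3 paper.
    observed = mean_hours_between(54, 419)
    print(f"16,384 GPUs: one interruption every {observed:.2f} h")

    # Hypothetical: charge every interruption to a GPU and back out a per-GPU figure,
    # then see what the same per-GPU reliability would mean at other cluster sizes.
    per_gpu = observed * 16_384
    for n in (1_024, 16_384, 100_000):
        print(f"{n:>7,} GPUs: one interruption every {cluster_mtbf(per_gpu, n):.2f} h")
```

Under these assumptions, a cluster sixteen times smaller would see an interruption roughly every two days, while a 100,000-GPU cluster would be interrupted about every half hour.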

Fortunately, the Llama 3 team maintained more than 90% effective training time despite the high failure rate. Only three interruptions required significant manual intervention; automation handled the rest.
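Effective training time is the share of wall-clock time spent making forward progress. A common way to keep it high when interruptions arrive every few hours is periodic checkpointing, so that each failure costs only the work done since the last checkpoint plus the restart time. The sketch below is a first-order back-of-the-envelope model, not Meta's accounting: every input is a hypothetical value, and `young_interval` implements Young's classic approximation for the checkpoint interval that minimizes overhead.

```python
import math


def young_interval(mtbi_h: float, ckpt_cost_h: float) -> float:
    """Young's approximation of the checkpoint interval (hours) that minimizes
    checkpoint-writing overhead plus expected rework after failures."""
    return math.sqrt(2.0 * ckpt_cost_h * mtbi_h)


def effective_fraction(mtbi_h: float, interval_h: float,
                       ckpt_cost_h: float, restart_cost_h: float) -> float:
    """First-order estimate of the fraction of wall-clock time spent on useful training.

    Assumes failures arrive at a constant rate 1/mtbi_h and are rare relative to
    the checkpoint interval, so the individual overheads can simply be added.
    """
    # Time spent writing one checkpoint per interval.
    checkpoint_overhead = ckpt_cost_h / interval_h
    # Each failure loses, on average, half an interval of work plus the restart time.
    failure_overhead = (interval_h / 2.0 + restart_cost_h) / mtbi_h
    return max(0.0, 1.0 - checkpoint_overhead - failure_overhead)


if __name__ == "__main__":
    # Hypothetical inputs: one interruption every ~3 h, 30 s to write a checkpoint,
    # 5 min to detect the fault, replace the bad node and reload state.
    mtbi, ckpt, restart = 3.0, 30 / 3600, 5 / 60
    tau = young_interval(mtbi, ckpt)
    for label, interval in [("Young", tau), ("half", tau / 2), ("double", tau * 2)]:
        eff = effective_fraction(mtbi, interval, ckpt, restart)
        print(f"{label:>6}: checkpoint every {interval * 60:5.1f} min -> {eff:.1%} effective")
```

Checkpointing more often wastes time writing state, while checkpointing less often loses more work per failure; the square-root rule balances the two, which is why faster checkpoint writes and faster restarts both matter at this scale.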


Disclaimer:

- This channel does not make any representations or warranties regarding the availability, accuracy, timeliness, effectiveness, or completeness of any information posted. It hereby disclaims any liability or consequences arising from the use of the information.

- This channel is non-commercial and non-profit. The re-posted content does not signify endorsement of its views or responsibility for its authenticity. It does not intend to constitute any other guidance. This channel is not liable for any inaccuracies or errors in the re-posted or published information, directly or indirectly.

- Some data, materials, text, images, etc., used in this channel are sourced from the internet, and all reposts are duly credited to their sources. If you discover any work that infringes on your intellectual property rights or personal legal interests, please contact us, and we will promptly modify or remove it.