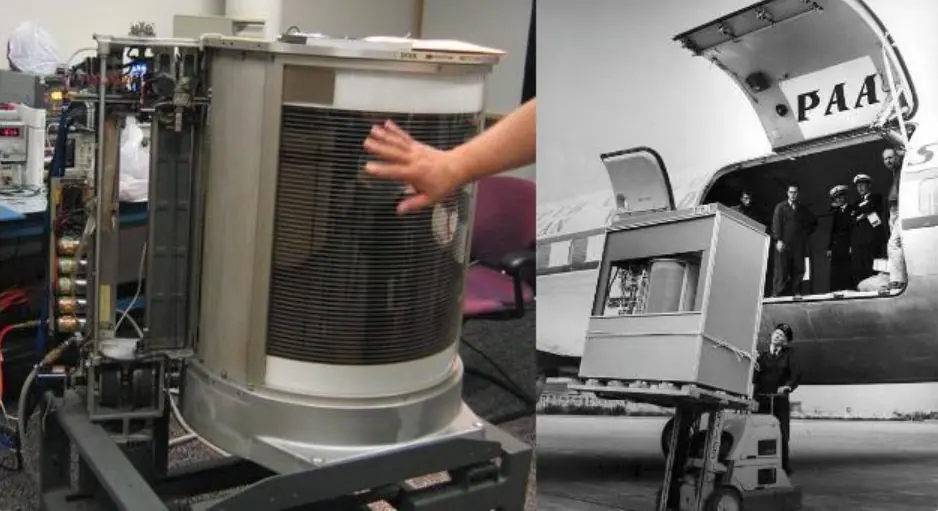

Early disks, like the earliest computers, were bulky yet had very limited capacity. The world’s first disk was invented by IBM in 1956, and it was larger than a modern side-by-side refrigerator. Despite its massive size, its capacity was tiny: only about 5MB.

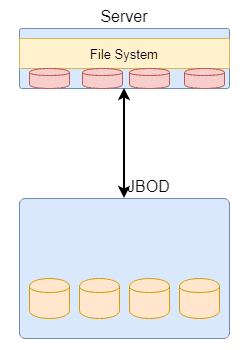

Because of its small capacity, a disk was typically used by only one computer. This arrangement is known as direct-attached storage (DAS): the storage is connected directly to the computer via cables, as illustrated in Figure 2.

Because the devices are large and cable length is limited, the biggest problem with DAS is that storage ends up dispersed across the data center. Each storage unit sits right next to its compute node, so wherever the compute nodes are, the storage is there too. This makes storage devices difficult to manage.

As shown in Figure 3, storage devices may be found on every floor of a data center, and even scattered across different areas of each floor. As business volume grows, the number of storage devices naturally increases, but it is difficult to keep the storage devices that serve the same business concentrated in one area.

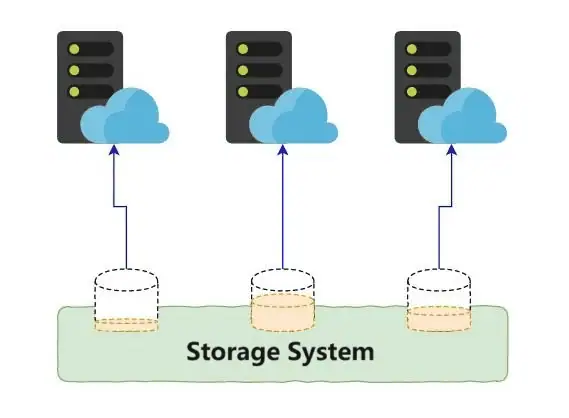

As disk technology has advanced, disks have become much smaller and their capacity much larger (Seagate’s 16TB hard drive is already on the market, millions of times the capacity of the first disk). Once disks became small enough, some companies began integrating many of them into a single large device, forming a storage system. A storage system does more than store data: through additional software it provides features such as RAID and data protection, which ensure the reliability and availability of the system.

Because storage systems have huge capacity (a storage system can usually support thousands of disks, with total capacity in the PB range), a single system can typically meet the space needs of dozens or even hundreds of compute servers. However, the storage space a business needs is often unpredictable, so after a while some compute nodes may have very high space utilization while others have very low utilization, as shown in Figure 4.

Administrators then need to adjust storage capacity dynamically based on actual usage: for example, shrinking the space assigned to nodes with low utilization and expanding the space of nodes with high utilization so they do not run out of storage.
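The manual adjustment described above is essentially a threshold check on each node’s utilization. The sketch below is a minimal illustration, assuming hypothetical high and low watermarks of 85% and 30% and made-up node figures; real policies depend on the workload and on how quickly capacity can be added.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only.
HIGH_WATERMARK = 0.85
LOW_WATERMARK = 0.30


@dataclass
class NodeAllocation:
    name: str
    allocated_gb: int  # capacity currently assigned to the node
    used_gb: int       # capacity the node has actually written


def suggest_action(node: NodeAllocation) -> str:
    """Return 'expand', 'shrink', or 'keep' based on space utilization."""
    utilization = node.used_gb / node.allocated_gb
    if utilization >= HIGH_WATERMARK:
        return "expand"
    if utilization <= LOW_WATERMARK:
        return "shrink"
    return "keep"


nodes = [
    NodeAllocation("node-a", allocated_gb=1000, used_gb=950),
    NodeAllocation("node-b", allocated_gb=1000, used_gb=120),
    NodeAllocation("node-c", allocated_gb=1000, used_gb=500),
]
for n in nodes:
    print(n.name, f"{n.used_gb / n.allocated_gb:.0%}", suggest_action(n))
```

Every decision here still has to be acted on by an administrator, node by node, which is exactly the tedium the next paragraph refers to.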

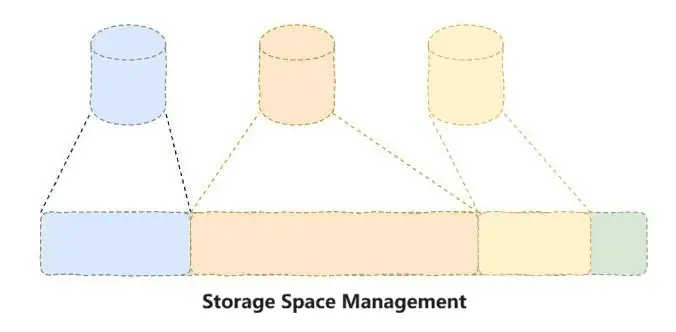

This operation is tedious and error-prone, which is why automatic thin provisioning emerged. Thin provisioning simplifies capacity management for storage administrators. However, it is not a management function of the storage system; it is a feature of how the storage system allocates and stores data.

Traditional storage space management relies on pre-allocation (often called thick provisioning). The storage space a compute node sees is space actually allocated in the storage system, regardless of whether, or how much of it, the compute node is using. For example, if a storage administrator allocates 1TB to a compute node, 1TB is allocated in the storage system, and that space cannot be used by any other compute node.
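Pre-allocation can be modeled as a pool that reserves a volume’s full size the moment the volume is created. The following sketch uses a hypothetical `ThickPool` class with capacities in GB; it only tracks the accounting, not real data.

```python
class ThickPool:
    """Pre-allocation: a volume's full size is reserved when it is created."""

    def __init__(self, capacity_gb: int):
        self.capacity_gb = capacity_gb
        self.reserved_gb = 0
        self.volumes: dict[str, int] = {}  # volume name -> reserved size in GB

    def create_volume(self, name: str, size_gb: int) -> None:
        if self.reserved_gb + size_gb > self.capacity_gb:
            raise RuntimeError(f"not enough free space for {name}")
        # The whole size is reserved up front, even if nothing is ever written.
        self.reserved_gb += size_gb
        self.volumes[name] = size_gb

    def free_gb(self) -> int:
        return self.capacity_gb - self.reserved_gb


pool = ThickPool(capacity_gb=2048)
pool.create_volume("node-a", 1024)
print(pool.free_gb())  # 1024, although node-a has not written anything yet
```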

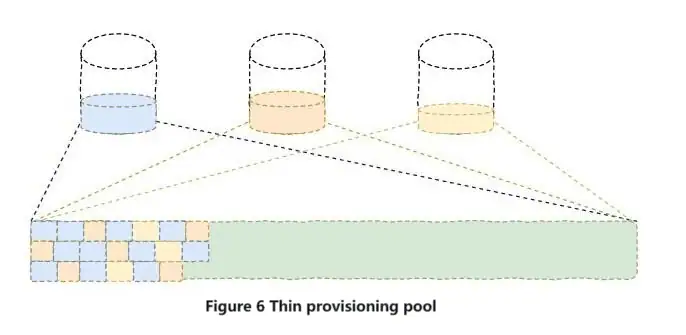

Automatic thin provisioning is a different storage space management technique: instead of allocating the assigned storage space immediately, it defers allocation. The user sees 1TB of storage space, while the storage system consumes only the space actually used, say 1GB. As shown in the figure, although three different storage devices are each allocated a large amount of space, that space is not occupied immediately; physical space is allocated according to the compute nodes’ actual usage.
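Because allocation is deferred, the total logical space promised to compute nodes can exceed the pool’s physical capacity. The sketch below computes that accounting for a hypothetical 2TB pool and three 1TB volumes; the written amounts are illustrative, not measured.

```python
from dataclasses import dataclass


@dataclass
class ThinVolume:
    name: str
    logical_gb: int   # size the compute node sees
    written_gb: int   # physical space actually consumed in the pool


def pool_report(pool_capacity_gb: int, volumes: list[ThinVolume]) -> dict:
    """Compare promised (logical) space with consumed (physical) space."""
    logical = sum(v.logical_gb for v in volumes)
    physical = sum(v.written_gb for v in volumes)
    return {
        "logical_gb": logical,
        "physical_used_gb": physical,
        "physical_free_gb": pool_capacity_gb - physical,
        "subscription_ratio": logical / pool_capacity_gb,
    }


volumes = [
    ThinVolume("node-a", logical_gb=1024, written_gb=1),
    ThinVolume("node-b", logical_gb=1024, written_gb=50),
    ThinVolume("node-c", logical_gb=1024, written_gb=200),
]
print(pool_report(2048, volumes))
```

Here 3072GB is promised from a 2048GB pool (a ratio of 1.5) while only 251GB is physically used. A ratio above 1 means the pool is overcommitted, so physical usage, not the logical sizes, is what has to be monitored.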

The principle of thin provisioning is simple. It divides the storage system’s resource pool into very small units and allocates a unit only when a compute node actually stores data there. What the user sees is a logical space, not a physical one.

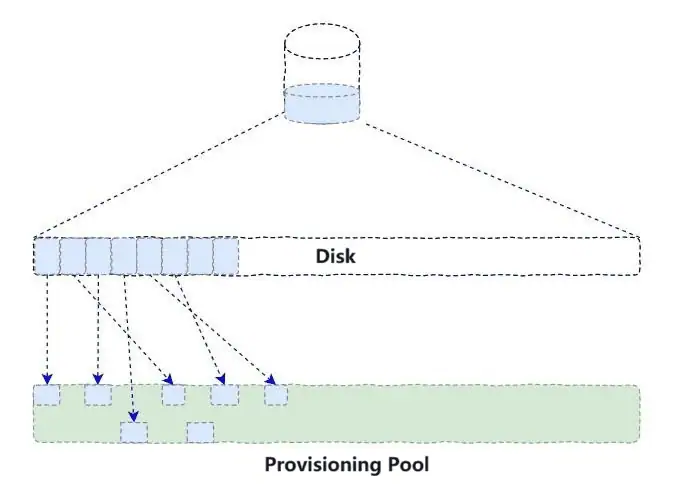

To explain in more detail: disk space is a linear address space. A 1TB disk has addresses ranging from 0 to 1TB, much like a very large array. We can divide this space into equally sized blocks, such as 8KB, and manage it at that granularity.
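With a fixed block size, mapping a byte offset to a block number is plain integer division. A minimal sketch, assuming 8KB blocks and a 1TB disk measured in binary units (2^40 bytes); `block_range` is a hypothetical helper that returns every block a read or write touches.

```python
BLOCK_SIZE = 8 * 1024   # 8KB blocks
DISK_SIZE = 1024 ** 4   # 1TB in binary units (2**40 bytes)


def block_range(offset: int, length: int) -> range:
    """Return the logical block numbers touched by a byte range."""
    if length <= 0:
        return range(0)
    first = offset // BLOCK_SIZE
    last = (offset + length - 1) // BLOCK_SIZE
    return range(first, last + 1)


print(DISK_SIZE // BLOCK_SIZE)       # 134217728 blocks in 1TB
print(list(block_range(0, 1)))       # [0]
print(list(block_range(8000, 500)))  # [0, 1]: the write straddles a block boundary
```

A 500-byte write that crosses a block boundary touches two blocks, so under thin provisioning it may trigger allocation of both.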

When the storage system assigns storage space to a compute node, the user sees a logical space that does not occupy actual storage resources. When the user accesses the storage device, the storage system checks its records to see whether that logical space already has corresponding physical space. If it does, the access goes to that physical space; otherwise, the storage system allocates physical space first.
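The lookup-or-allocate logic can be sketched as a per-volume mapping table from logical block numbers to physical block numbers. This is a minimal in-memory illustration: the class name `ThinProvisionedVolume`, the plain list standing in for the storage pool’s free space, and the choice to return zeros when reading a never-written block are all assumptions for the example, not a description of any particular product.

```python
BLOCK_SIZE = 8 * 1024  # 8KB blocks (assumed granularity)


class ThinProvisionedVolume:
    """Hypothetical thin volume: physical blocks are allocated on first write."""

    def __init__(self, logical_blocks: int, pool_free: list[int]):
        self.logical_blocks = logical_blocks  # size the compute node sees
        self.pool_free = pool_free            # free physical blocks (shared pool)
        self.mapping: dict[int, int] = {}     # logical block -> physical block
        self.media: dict[int, bytes] = {}     # stand-in for the physical disks

    def _check(self, lba: int) -> None:
        if not 0 <= lba < self.logical_blocks:
            raise IndexError(f"logical block {lba} out of range")

    def write_block(self, lba: int, data: bytes) -> None:
        self._check(lba)
        pba = self.mapping.get(lba)
        if pba is None:
            # No physical space yet: allocate one block from the pool now.
            if not self.pool_free:
                raise RuntimeError("storage pool exhausted")
            pba = self.pool_free.pop()
            self.mapping[lba] = pba
        # Pad or truncate to exactly one block before storing it.
        self.media[pba] = data.ljust(BLOCK_SIZE, b"\0")[:BLOCK_SIZE]

    def read_block(self, lba: int) -> bytes:
        self._check(lba)
        pba = self.mapping.get(lba)
        if pba is None:
            # Never written, so no physical space exists; return zeros.
            return bytes(BLOCK_SIZE)
        return self.media[pba]


pool_free = list(range(4))  # a tiny pool with 4 physical blocks
vol = ThinProvisionedVolume(logical_blocks=134_217_728, pool_free=pool_free)
vol.write_block(1000, b"hello")
print(len(vol.mapping), len(pool_free))  # 1 3
print(vol.read_block(1000)[:5])          # b'hello'
```

The volume advertises 134,217,728 logical blocks (1TB at 8KB), yet after one write only one of the pool’s four physical blocks is consumed.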

An implementation of these ideas therefore needs two components. The first manages the volume’s address space, recording whether each logical block has corresponding physical space. The second manages the resources of the storage pool, recording which physical space is already in use so that nothing is allocated twice.
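For the second component, one common way to record which physical blocks are in use is a bitmap with one bit per block. The sketch below is a hypothetical in-memory `BitmapAllocator`; a real storage system would also need to persist this state and keep it consistent with the mapping tables across crashes, which is where much of the implementation complexity lies.

```python
class BitmapAllocator:
    """Tracks which physical blocks in the pool are in use, one bit per block."""

    def __init__(self, num_blocks: int):
        self.num_blocks = num_blocks
        self.bits = bytearray((num_blocks + 7) // 8)  # all blocks start free
        self.next_hint = 0  # block at which the next search starts

    def _is_set(self, block: int) -> bool:
        return (self.bits[block // 8] >> (block % 8)) & 1 == 1

    def allocate(self) -> int:
        # Scan at most once around the pool, starting from the hint.
        for i in range(self.num_blocks):
            block = (self.next_hint + i) % self.num_blocks
            if not self._is_set(block):
                self.bits[block // 8] |= 1 << (block % 8)  # mark as used
                self.next_hint = (block + 1) % self.num_blocks
                return block
        raise RuntimeError("storage pool exhausted")

    def free(self, block: int) -> None:
        if not self._is_set(block):
            raise ValueError(f"block {block} is not allocated")
        self.bits[block // 8] &= ~(1 << (block % 8))  # mark as free


alloc = BitmapAllocator(num_blocks=4)
a = alloc.allocate()
b = alloc.allocate()
print(a, b)              # 0 1
alloc.free(a)
print(alloc.allocate())  # 2: the search resumes after the last allocation
```

Because a block’s bit is set before its number is handed out, the same physical block cannot be given to two logical blocks at once.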

The basic principle of a thin-provisioned storage pool is simple, but the implementation is not, mainly because many exceptional situations must be handled for fault tolerance. This article has introduced the concept and basic principles of thin provisioning; more details will be covered in future issues.

