Why do NVIDIA, AMD, and Intel always create a buzz whenever they release new products incorporating AI? What makes NVIDIA’s GPU so special?

NVIDIA emphasizes that its products can accelerate AI training and inference, helping make AI technology more widespread. For millennials, AI is already part of everyday life: computers can be AI, robots can be AI, and many movies revolve around AI. Yet although we encounter it frequently, AI remains a technology that feels both familiar and somewhat alien.

AI, short for Artificial Intelligence, refers to intelligence exhibited by machines built by humans (usually robots or computers). It uses computer programs to simulate human intelligence, imitating human thought processes, behavior patterns, and decision-making, including perception, learning, logical reasoning, statistical and probabilistic inference, error correction, and even taking action.

01

When AI Meets Servers: Igniting a Futuristic Boom

‘Which server are you on?’ This question, familiar to anyone who plays online games, also hints at what a server is. Taking game servers as an example, a server is an enterprise-grade computer that provides services, such as gameplay, to specific users. Beyond offering high performance, security, and stability, servers can take on different functions depending on user needs.

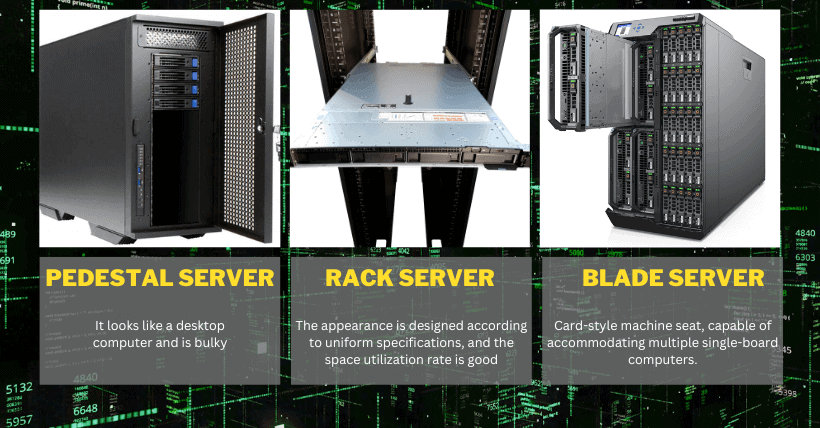

Like other computers, servers are built from hardware components such as motherboards, processors, and memory. Unlike regular computers, however, servers often pack multiple processors, and sometimes multiple boards, into one system, which makes them physically larger. They come in several form factors, including large pedestal (tower) servers, space-efficient rack servers, and compact blade servers.

On the software side, servers run a server operating system, such as Windows Server, which resembles desktop operating systems but offers enhanced performance and the ability to handle many simultaneous connections and run multiple applications smoothly.

① AI Server

An AI server is, in essence, a server designed to run AI workloads with greater computational speed and efficiency. AI servers often use a ‘heterogeneous architecture’, combining different types of processors or computing components within one system to meet the demands of different workloads. This matters because AI workloads typically require extensive numerical calculation and parallel processing.

While traditional CPUs excel at general computing tasks, they can fall short on workloads such as deep learning, which involve extensive matrix calculations. In such cases, pairing CPUs with accelerators such as GPUs and TPUs (heterogeneous designs can also integrate different types of processor cores or computing units on the same chip) better meets the requirements of AI tasks, providing more computational power and enabling models to recognize a wider range of objects and phenomena.
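To see why matrix calculations suit parallel hardware, here is a minimal Python sketch of a naive matrix multiplication, the core operation inside a neural-network layer. The function names `dot` and `matmul` and the tiny input and weight matrices are illustrative assumptions, not taken from any AI framework; real frameworks hand this work to optimized GPU or TPU libraries.

```python
# Illustrative sketch only: a naive matrix multiply showing that every
# output element is an independent dot product.

def dot(row, col):
    """Multiply-accumulate over one row of A and one column of B."""
    return sum(a * b for a, b in zip(row, col))

def matmul(A, B):
    """Naive C = A x B; each C[i][j] could be computed in parallel."""
    n_inner = len(B)
    if any(len(row) != n_inner for row in A):
        raise ValueError("inner dimensions must match")
    cols = list(zip(*B))  # columns of B as tuples
    # No C[i][j] depends on any other C[i][j], so this work could be
    # spread across many GPU threads instead of being done one by one.
    return [[dot(row, col) for col in cols] for row in A]

# Tiny hypothetical "layer": 2 inputs x 3 features times a 3 x 2 weight matrix.
inputs = [[1, 2, 3],
          [4, 5, 6]]
weights = [[1, 0],
           [0, 1],
           [1, 1]]
print(matmul(inputs, weights))  # prints [[4, 5], [10, 11]]
```

Because no output element depends on any other, a GPU can assign each one to its own thread, while a CPU with relatively few cores has to work through them far more sequentially.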

As AI applications become more widespread, demand is rising. According to estimates from research firm TrendForce, shipments of AI servers (including those equipped with GPUs, FPGAs, ASICs, and other accelerators) could approach 1.2 million units in 2023, a 38.4% year-on-year increase. AI servers are expected to account for nearly 9% of overall server shipments, rising to an estimated 15% by 2026. TrendForce has also revised its forecast for AI server shipments, now projecting a compound annual growth rate (CAGR) of 22% from 2022 to 2026.
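The quoted figures can be cross-checked with simple compound-growth arithmetic. The sketch below assumes the 38.4% increase is measured against 2022 shipments and that the 22% CAGR compounds over the four years from 2022 to 2026; the derived numbers illustrate the math only and are not TrendForce figures.

```python
# Illustrative arithmetic only: values derived here are NOT TrendForce estimates.

def cagr(start, end, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1

def project(start, rate, years):
    """Grow `start` at a constant annual `rate` for `years` years."""
    return start * (1 + rate) ** years

shipments_2023 = 1.2e6   # "could approach 1.2 million units"
yoy_growth_2023 = 0.384  # 38.4% year-on-year increase
# Assumption: the 38.4% growth is relative to 2022 shipments.
shipments_2022 = shipments_2023 / (1 + yoy_growth_2023)

# Assumption: the 22% CAGR compounds over 4 years (2022 -> 2026).
implied_2026 = project(shipments_2022, 0.22, 4)

print(f"implied 2022 base:   {shipments_2022:,.0f} units")
print(f"implied 2026 volume: {implied_2026:,.0f} units")
```

Under these assumptions, the implied 2022 base is about 0.87 million units and the implied 2026 volume is roughly 1.9 million units.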

However, insufficient capacity for CoWoS (Chip-on-Wafer-on-Substrate), an advanced packaging process, has caused a shortage of GPUs for AI servers. TSMC, a major player in CoWoS packaging, faces a significant supply-demand imbalance on its related production lines. Given the rapid growth in AI demand, TSMC has decided to expand its CoWoS capacity ahead of schedule in the Hsinchu and Southern Taiwan Science Parks and has placed additional purchase orders with equipment suppliers.

02

The Core of AI Servers: What Types of Chip Processors Are There?

As AI servers become more prevalent, the chips inside them play a pivotal role, igniting what can be described as a new chip war. Intel focuses primarily on CPUs; AMD excels in CPUs and APUs while pushing further into the GPU market; and NVIDIA dominates with its powerful GPUs. Each major manufacturer has its specialty and aims to establish a strong presence in the AI market. The relevant processors are introduced below:

① CPU (Central Processing Unit)

As the primary component of a computer, the CPU processes information, controls operations, and executes instructions. These instructions come from the operating system, applications, or user programs. Without a CPU, a computer cannot even complete the most basic startup process.

② GPU (Graphics Processing Unit)

Like a CPU, a GPU is a processor within a computer or server, but its role is different. CPUs have complex, general-purpose architectures, whereas GPUs use a parallel computing architecture that executes many operations simultaneously. Each GPU core is simpler, but a GPU typically has far more of them, making it well suited to large-scale, highly parallel tasks that apply the same computation to vast amounts of data. GPU applications have since extended well beyond graphics to scientific computing, artificial intelligence, machine learning, and cryptocurrency mining.
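The difference between the two approaches can be sketched in Python: a CPU-style loop processes data one element at a time, while a GPU-style approach splits the data into chunks and applies the same simple operation to every chunk concurrently. The `brighten` operation and the worker count are hypothetical, and Python threads do not give a true parallel speedup for this kind of CPU-bound work because of the global interpreter lock, so the code illustrates only how the work is decomposed.

```python
from concurrent.futures import ThreadPoolExecutor

def brighten(pixel, amount=40):
    """Hypothetical per-pixel operation: add brightness, clamped to 255."""
    return min(pixel + amount, 255)

def process_sequential(pixels):
    # CPU-style: one worker walks through the data element by element.
    return [brighten(p) for p in pixels]

def process_parallel(pixels, workers=8):
    # GPU-style decomposition: split the data into chunks, one per "core",
    # and apply the same operation to every chunk concurrently.
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(process_sequential, chunks)  # preserves order
    return [p for chunk in results for p in chunk]

pixels = list(range(0, 256, 16))
print(process_parallel(pixels, workers=4))
```

Both functions return the same result; the parallel version simply divides identical, independent work among many workers, which is the pattern GPU hardware executes with thousands of threads.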

③ Display Card

A display card, also known as a graphics card or video card, is a device that connects to a monitor and converts graphics or image data into a visible display. The card uses its GPU to process and accelerate these image calculations, then sends the processed images to the connected monitor, allowing users to see graphics, videos, and games.

The core component of a graphics card is typically the GPU, which handles all computations related to images and graphics. Graphics processing hardware falls into the following two types:

Integrated Graphics (iGPU – Integrated Graphics Processing Unit)

Integrated graphics are graphics cores built into the main processor (CPU) or chipset that share system memory (RAM). They are not standalone hardware devices but come as part of the CPU or chipset; because they share memory and resources with the CPU, their performance is limited by system memory bandwidth and CPU performance. They offer lower performance and are typically suitable for basic graphics tasks, video playback, and light gaming.

Discrete Graphics Card

Discrete graphics cards are standalone hardware devices, sometimes simply called GPUs, that are typically inserted into a slot on the motherboard. They operate separately from the CPU and have their own dedicated graphics memory (VRAM). They usually deliver much higher graphics performance, particularly for graphics-intensive applications such as gaming and 3D modeling, but they also consume more power and generate more heat. As a result, computers with discrete graphics cards may need better cooling systems and higher-capacity power supplies.
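Memory bandwidth explains much of the gap between the two types. Theoretical peak bandwidth is the bus width in bytes multiplied by the transfer rate. The configurations below are hypothetical but typical examples, not specific products: integrated graphics sharing dual-channel DDR4-3200 system memory, and a discrete card with a 256-bit GDDR6 bus at 14 GT/s.

```python
def peak_bandwidth_gbs(bus_width_bits, transfer_rate_gts):
    """Theoretical peak bandwidth in GB/s = bytes per transfer x GT/s."""
    return bus_width_bits / 8 * transfer_rate_gts

# Hypothetical integrated-graphics setup: dual-channel DDR4-3200
# (2 x 64-bit channels = 128 bits at 3.2 GT/s), shared with the CPU.
shared_ram = peak_bandwidth_gbs(128, 3.2)

# Hypothetical discrete card: 256-bit GDDR6 bus at 14 GT/s, dedicated VRAM.
dedicated_vram = peak_bandwidth_gbs(256, 14)

print(f"shared system RAM: {shared_ram:.1f} GB/s (also used by the CPU)")
print(f"dedicated VRAM:    {dedicated_vram:.1f} GB/s")
```

Here the discrete card's dedicated VRAM offers several times the bandwidth (448 GB/s versus 51.2 GB/s), and the integrated GPU must also share its smaller budget with the CPU.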

Accelerated Processing Unit (APU)

An APU integrates multiple functions on one chip; AMD’s APUs, for example, combine a CPU and a GPU. This integration reduces the computer’s size and improves airflow for cooling, but its graphics performance does not necessarily match that of a dedicated GPU.

Tensor Processing Unit (TPU)

In addition, the TPU is a specialized processor developed by Google to accelerate artificial intelligence (AI) computing tasks. TPUs are optimized primarily for AI applications such as machine learning and deep learning, which sets them apart from “general-purpose processors” like CPUs, GPUs, and APUs.

03

AI Servers: Beyond Depth, a Need for Speed

A good processor makes it possible to tackle vast amounts of data computation, but the rest of the system must keep up for that power to translate into results. Consider the three primary components of networking, computing, and storage: for the system to run efficiently, each must keep pace with the others; otherwise, the slowest one becomes a performance bottleneck.
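The bottleneck idea can be expressed with a simple model: when data must flow through networking, computing, and storage in turn, with the stages working in a pipeline, sustained throughput is capped by the slowest stage. The throughput numbers below are hypothetical, and the model ignores caching, contention, and other real-world effects.

```python
def pipeline_throughput(stages):
    """Throughput of pipelined stages is capped by the slowest stage.

    Returns (effective_rate, name_of_bottleneck_stage).
    """
    bottleneck = min(stages, key=stages.get)
    return stages[bottleneck], bottleneck

# Hypothetical per-stage throughputs for one server, in GB/s.
server = {"network": 12.5, "compute": 400.0, "storage": 7.0}
rate, slowest = pipeline_throughput(server)
print(f"effective rate: {rate} GB/s, limited by {slowest}")
# prints: effective rate: 7.0 GB/s, limited by storage
```

In this example, doubling compute to 800 GB/s would leave the effective rate unchanged at 7.0 GB/s; only faster storage would help.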

① High Performance Computing (HPC)

According to the GIGABYTE Technology website, HPC, or High-Performance Computing, refers to the ability to process data or execute instructions rapidly. Achieving this typically requires a large number of CPUs or GPUs working together.

Computing Power

Computing power is commonly measured in TOPS (Tera Operations Per Second) or TFLOPS (Tera Floating-point Operations Per Second), which indicate how many trillion operations (often integer or fixed-point) or floating-point operations, respectively, a chip can perform per second. The larger the number, the higher the chip’s peak processing throughput. NVIDIA’s founder, Jensen Huang (Huang Renxun), has previously claimed that its products can achieve exaFLOPS-level computing power.

| FLOPS Type | Abbreviation | Operations per second |
|---|---|---|
| kiloFLOPS | kFLOPS | 10³ |
| megaFLOPS | MFLOPS | 10⁶ |
| gigaFLOPS | GFLOPS | 10⁹ |
| teraFLOPS | TFLOPS | 10¹² |
| petaFLOPS | PFLOPS | 10¹⁵ |
| exaFLOPS | EFLOPS | 10¹⁸ |
| zettaFLOPS | ZFLOPS | 10²¹ |
| yottaFLOPS | YFLOPS | 10²⁴ |
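The prefixes in the table and headline TFLOPS figures can be tied together in a short Python sketch. Theoretical peak throughput is commonly estimated as cores × clock rate × floating-point operations per core per cycle, with a fused multiply-add counted as two operations. The chip parameters below are hypothetical, and real workloads typically sustain well below this theoretical peak.

```python
# SI prefixes from the FLOPS table, largest first.
PREFIXES = [
    ("Y", 1e24), ("Z", 1e21), ("E", 1e18), ("P", 1e15),
    ("T", 1e12), ("G", 1e9), ("M", 1e6), ("k", 1e3),
]

def format_flops(flops):
    """Express a raw FLOPS figure with the largest prefix that fits."""
    for symbol, scale in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:.2f} {symbol}FLOPS"
    return f"{flops:.2f} FLOPS"

def peak_flops(cores, clock_hz, flops_per_core_per_cycle):
    """Theoretical peak = cores x clock rate x operations per core per cycle."""
    return cores * clock_hz * flops_per_core_per_cycle

# Hypothetical accelerator: 10,000 cores at 1.5 GHz, each completing one
# fused multiply-add (counted as 2 floating-point operations) per cycle.
chip = peak_flops(10_000, 1.5e9, 2)
print(format_flops(chip))   # prints 30.00 TFLOPS

# Chips of this kind needed to reach 1 exaFLOPS at theoretical peak.
print(round(1e18 / chip))   # prints 33333
```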

04

The Growing Significance of GPUs and the Diminishing Future of CPUs

NVIDIA constantly introduces new products, with a strong focus on GPUs. Huang Renxun, NVIDIA’s founder, has emphasized that the AI era has arrived and that GPU-accelerated computing has supplanted CPUs; combined with generative AI, it has ushered in a new era of computing. He said, “In the past, every few years, CPU computing speed could increase by 5-10 times, but that trend has come to an end. GPUs are the new solution.”

Coincidentally, AMD has also been actively developing GPUs in recent years. At its data center and AI technology event on June 15th, it introduced high-end accelerators including the Instinct MI300X GPU and the Instinct MI300A, which AMD touts as the world’s first APU accelerator for AI and HPC applications. The move poses a strong challenge to GPU leader NVIDIA. GPUs, it seems, have become the main battleground for computing power, while the significance of CPUs has somewhat diminished.

To use a simple analogy, CPUs are akin to the human brain: they can handle a wide variety of tasks, which makes them versatile processors, but they can struggle with speed and efficiency when faced with a huge number of small tasks. GPUs, by contrast, can efficiently handle a large number of simpler tasks simultaneously, making them better suited to AI applications. In this context, they can be considered specialized processors.
